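Service Process

蘊域科技 is organized into three teams: system integration, installation, and planning and design. The planning and design team works with the owner to understand their needs and plans a user-friendly set of smart devices that fits the owner's living habits. The system integration team then connects the devices through software to build a complete system and interface for the customer. Finally, the installation team installs the system and devices on site. Together, we work to give owners a convenient, high-quality, high-performance smart home.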

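Planning and Measurement

The responsibilities of the 蘊域 planning and design team are as follows:

1

蘊域科技's smart system planners learn each customer's needs and living habits and tailor the smart home system to fit them.

2

We develop a smart device plan that meets the customer's needs. This covers site measurement, wiring planning, lighting design, environmental control, and security management, so that every plan fully meets the owner's expectations.

3

蘊域 planners lay out the smart system devices and the overall architecture so that all parts of the system work together and perform at their best.

4

At the acceptance stage, our planners check each device one by one to confirm that everything operates properly. This lets us deliver a complete smart home experience at handover.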

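Product Integration

The responsibilities of the product system integration team are as follows:

1

We hold distribution and agency rights for a number of smart device brands. This lets us integrate devices from different brands, bring appliance control for the major brands into our system, and offer customers the most suitable product combination.

2

We work closely with the planning and design team to understand their design plans in depth.

3

We develop the software that lets the various smart devices interoperate, building a complete smart home system.

4

We design the user interface so that customers can control their smart devices easily.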

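System Installation

The responsibilities of the installation team are as follows:

1

蘊域科技 has a team of professional engineers who receive the plan from the product system integration team and coordinate closely with the construction crew to meet the plan and the customer's requirements, and to make sure wiring provisions are in place during the plumbing and electrical stage.

2

The installation team installs the devices and system during the second plumbing and electrical phase, and inspects and accepts the work to confirm that every device is installed correctly and operates properly. When the customer moves in, they can enjoy a complete smart home experience.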

Reimagining Sight: Perception Beyond Frames with Event-Based Vision

An event-based vision sensor (EVS), often called an event camera or neuromorphic camera, is a specialized image sensor designed to mimic the behavior of the human retina. It captures visual information in a fundamentally different way from traditional cameras. Rather than capturing whole frames at a fixed rate, an EVS is event-driven: it responds only to significant changes in the scene, such as changes in brightness or motion. This reduces power consumption and data processing load, which in turn extends runtime. Because it is not tied to a frame rate, an EVS offers very high temporal resolution and captures swift motion and fast-changing scenes more effectively than conventional cameras, resolving events that occur within microseconds.
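To make the event-driven idea concrete, the following is a minimal sketch, not a model of any particular sensor. It assumes the commonly used description in which a pixel emits an event when its log intensity changes by more than a contrast threshold, and it approximates this by comparing two intensity snapshots. A real sensor fires each pixel asynchronously rather than comparing frames. The function name `generate_events`, the threshold value, and the sample data are all illustrative assumptions.

```python
import numpy as np

def generate_events(prev_frame, next_frame, t_us, threshold=0.2, eps=1e-3):
    """Return (x, y, t, polarity) tuples for pixels whose log intensity
    changed by at least `threshold` between two intensity snapshots."""
    # Compare brightness on a logarithmic scale (relative change).
    delta = np.log(next_frame + eps) - np.log(prev_frame + eps)
    # Only pixels that changed enough produce output; static pixels stay silent.
    ys, xs = np.nonzero(np.abs(delta) >= threshold)
    return [(int(x), int(y), t_us, 1 if delta[y, x] > 0 else -1)
            for y, x in zip(ys, xs)]

# A static 4x4 scene in which one pixel brightens and one darkens.
prev_frame = np.full((4, 4), 0.5)
next_frame = prev_frame.copy()
next_frame[1, 2] = 0.9  # brighter: polarity +1
next_frame[3, 0] = 0.2  # darker: polarity -1

events = generate_events(prev_frame, next_frame, t_us=150)
print(events)  # [(2, 1, 150, 1), (0, 3, 150, -1)]
```

Of the 16 pixels, only the two that changed produce output, which is where the reduction in data volume comes from.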

Event-Based Vision Sensor: Where Vision Meets Versatility

Detection & tracking

Event-based vision sensors excel at human body detection and object tracking. They capture dynamic scene changes and analyze the spatial and temporal patterns of those changes to determine the presence and movement of individuals. Their energy efficiency, low latency, and adaptability to different lighting conditions make them well suited to applications such as security, robotics, and human-computer interaction.

Anomaly detection in industrial automation

Event-based vision sensors play a key role in industrial automation: by swiftly detecting dynamic scene changes, they enable proactive responses that prevent or mitigate issues. They support early, efficient anomaly detection while conserving energy and data resources, which contributes to higher productivity, better quality control, and improved safety across manufacturing and industrial processes. Integrating them with AI/ML algorithms further improves anomaly detection accuracy and allows adaptation to diverse industrial environments over time.
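One simple way to picture event-based anomaly detection is to watch the event rate: a machine running normally produces a fairly steady number of events per time window, and a sudden burst or drop stands out. The sketch below is an illustration under that assumption and does not describe any specific product. The function names, the window length, and the three-standard-deviation rule are hypothetical choices.

```python
from statistics import mean, stdev

def window_counts(timestamps_us, window_us):
    """Count events in consecutive windows of `window_us` microseconds."""
    if not timestamps_us:
        return []
    counts = [0] * (max(timestamps_us) // window_us + 1)
    for t in timestamps_us:
        counts[t // window_us] += 1
    return counts

def flag_anomalies(counts, baseline, k=3.0):
    """Return indices of windows whose count lies more than k baseline
    standard deviations away from the baseline mean."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [i for i, c in enumerate(counts) if abs(c - mu) > k * sigma]

# Event counts per window recorded during normal operation (made-up values).
baseline = [10, 12, 11, 9, 10]

# Four 1 ms windows of event timestamps; the third contains a burst.
timestamps = (
    [i * 100 for i in range(10)]            # window 0: 10 events
    + [1000 + i * 90 for i in range(11)]    # window 1: 11 events
    + [2000 + i * 25 for i in range(40)]    # window 2: 40 events
    + [3000 + i * 100 for i in range(9)]    # window 3: 9 events
)

counts = window_counts(timestamps, window_us=1000)
anomalous = flag_anomalies(counts, baseline)
print(counts, anomalous)  # [10, 11, 40, 9] [2]
```

A deployed system would more likely use learned models, as the AI/ML integration mentioned above suggests. The rate threshold here is only the simplest baseline.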

Mobile

The fusion of “images” and “events” brings the speed and efficiency of neuromorphic-enabled vision to mobile devices. It significantly augments existing camera functions, particularly when capturing high-speed dynamic scenes (e.g., sports events) and in challenging low-light environments, thanks to event-based continuous, asynchronous pixel sensing.

Automotive

VoxelFlow™, co-developed by Terranet AB and Mercedes-Benz, is equipped with Prophesee Metavision® event-based vision sensors. It enhances existing radar, LiDAR, and vision systems in accident-prone areas within a range of 30 to 40 meters, enabling fast and accurate understanding and prediction of road conditions for autonomous driving (AD) and advanced driver-assistance systems (ADAS).

AR/VR

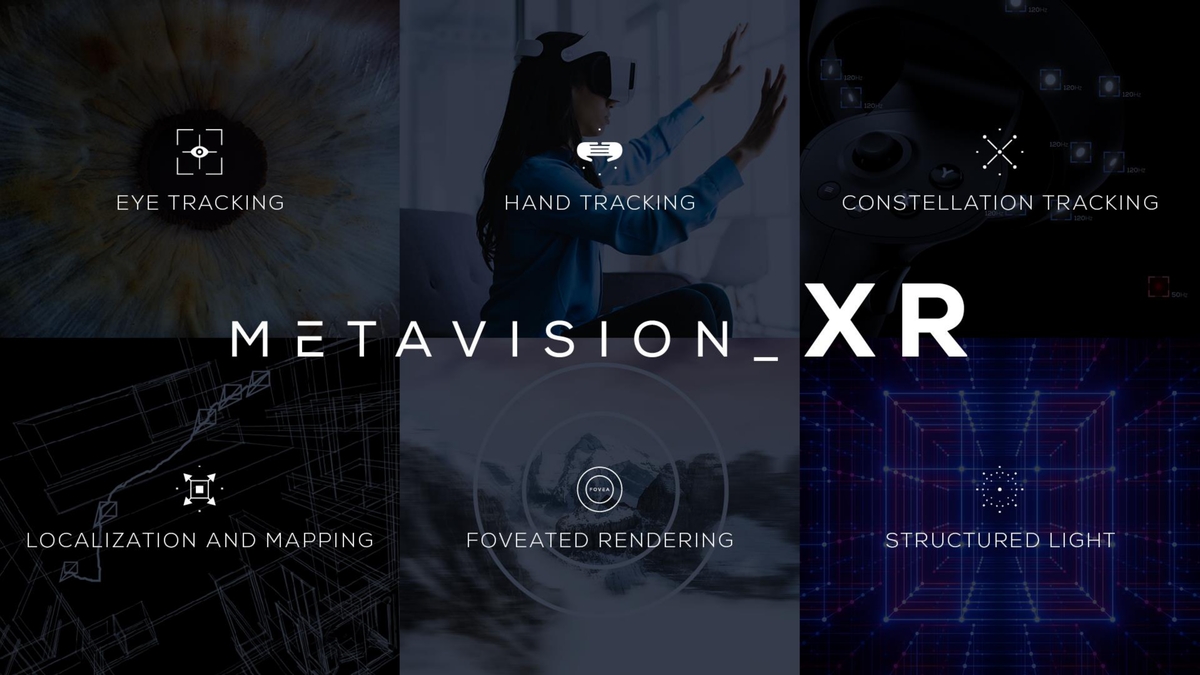

Event-based vision sensors offer an exceptionally high sampling rate (theoretically up to 10 kHz), a wide dynamic range, low power consumption, and minimal data volume. These attributes make them highly valuable in virtual reality (VR) applications such as 3D SLAM, gesture control, and eye tracking.

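Solutions

蘊域科技 provides comprehensive smart solutions, with customized system integration services for the needs of different types of spaces. From planning and design through system deployment, we build the smart environment that best fits each customer.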

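Smart Home Solutions

Complete smart home system integration for modern households, creating a comfortable, safe, and energy-efficient living environment.

1

Integrates environmental control systems such as smart lighting, air conditioning, and curtains for one-touch scene control.

2

Deploys a smart security system with all-round protection, including door locks, surveillance, and sensors.

3

Integrates audio-visual entertainment systems for an immersive home entertainment experience.

4

Customized smart scene settings that automatically adjust environmental parameters according to living habits.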
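As an illustration of what one-touch scene control can look like in software, here is a minimal sketch in which a scene is a named set of target states for several devices, applied in a single step. It does not reflect the actual system. The `Scene` class, the `apply_scene` function, the device names, and the state fields are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Scene:
    """A named set of target states, keyed by device id."""
    name: str
    settings: dict = field(default_factory=dict)

def apply_scene(scene, home_state):
    """Return a new home state with the scene's targets applied.

    Devices and fields the scene does not mention keep their current values.
    """
    new_state = {device: dict(state) for device, state in home_state.items()}
    for device, target in scene.settings.items():
        new_state.setdefault(device, {}).update(target)
    return new_state

home = {
    "living_room_light": {"on": True, "brightness": 100},
    "air_conditioner": {"on": False, "target_c": 26},
    "curtain": {"open_pct": 100},
}

movie_night = Scene("movie_night", {
    "living_room_light": {"brightness": 20},
    "air_conditioner": {"on": True, "target_c": 24},
    "curtain": {"open_pct": 0},
})

after = apply_scene(movie_night, home)
print(after["living_room_light"])  # {'on': True, 'brightness': 20}
```

Returning a new state instead of mutating the old one keeps the previous settings available, which makes it easy to restore them when the scene ends.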

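Commercial Space Solutions

Professional smart system integration for commercial spaces, improving operational efficiency and customer experience.

1

Smart meeting room integration, with audio-visual equipment, environmental control, and booking management.

2

Smart lighting and energy management systems that optimize energy efficiency and reduce operating costs.

3

Integrates access control, surveillance, fire protection, and other safety systems to keep commercial spaces secure.

4

Provides a remote monitoring and management platform for checking space status and equipment operation at any time.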

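Healthcare Solutions

Combines advanced AI imaging technology with smart systems to provide safe, efficient solutions for healthcare settings.

1

Integrates EVS event-based vision sensors for contactless fall detection and behavior monitoring.

2

Deploys a smart care system with emergency call, environmental monitoring, and medication reminders.

3

Integrates thermal imaging temperature detection for high-precision health monitoring.

4

Integrates a remote care platform so that family members and caregivers can check on elders' health at any time.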